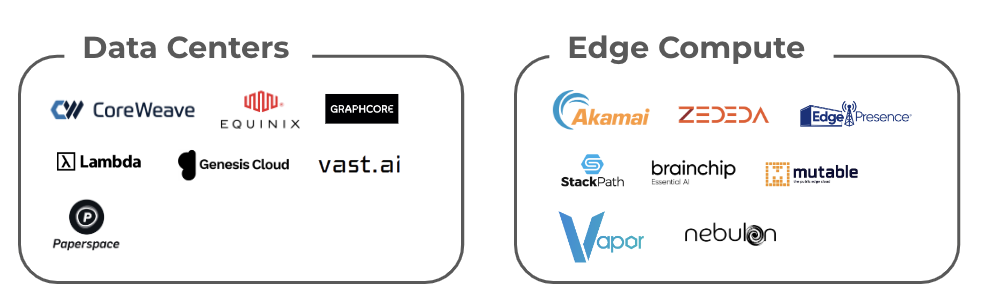

Intelligent Placement of Compute: Data Center Innovations and Edge Compute

Compute refers to the processing power a computer or system uses to execute tasks and run applications. Its components include CPUs, GPUs, TPUs, NPUs, and other processors that perform calculations and operations. Use cases range from basic tasks (e.g., word processing) to advanced workloads (e.g., AI model training, simulations). Compute is at the core of all digital applications, enabling data processing, analytics, and decision-making. Compute can take place locally (on an edge device) or remotely (in the cloud); a distributed model uses both.

For instance, when a user asks Siri on a smart speaker, “What is the weather today?”, the speaker (the edge device) processes the voice command locally, using a low-power NPU (Neural Processing Unit) to recognize the speech. This initial processing happens on the device for speed and privacy. The processed command is then sent to a cloud server in a data center, where high-performance CPUs/GPUs run natural language processing to interpret the question. The system queries weather databases and computes the response. The answer is sent back to the smart speaker, whose onboard chip converts the text-based response into speech and delivers it to the user.

As the example above illustrates, centralized systems such as data centers are designed for massive data throughput and high-performance workloads, while decentralized systems such as edge devices prioritize low-latency responsiveness and localized computation. Traditionally, these architectures operated in isolation, each optimized for distinct use cases. However, semiconductors designed for a distributed compute continuum have blurred the lines between centralized and decentralized processing.

Merging data center and edge computing into a unified framework allows workloads to be managed dynamically based on location and application requirements. Here are the components of this merged framework, each discussed in turn:

- Data Center Compute

- Edge Compute

- Interconnectivity Across the Continuum

- Dynamic Workload Management

1. Data Center Compute

At the core of distributed compute ecosystems are high-performance chips like GPUs, TPUs, and custom ASICs. These semiconductors live in data centers and handle massive parallel workloads, such as training AI models or processing large datasets, providing centralized power for compute-intensive tasks. Advanced interconnect technologies like NVLink and CXL facilitate high-speed data sharing across these systems, ensuring efficient scaling within data centers.

Data centers are centralized facilities optimized for large-scale batch processing, analytics, and storage, and are often located far from end users. Their advantages include:

- Scalability: Data centers can rapidly scale up or down to meet fluctuating computational demands. This flexibility makes them ideal for businesses handling seasonal spikes or unpredictable workloads, such as e-commerce platforms during holiday sales or AI training during iterative development cycles.

- Cost-Efficiency: By centralizing resources, data centers reduce the per-unit cost of compute, storage, and networking. Companies can avoid the capital expenditure of building their own infrastructure and instead pay as they go with providers such as AWS or CoreWeave.

- High-Density Computing: Data centers are optimized for high-density compute tasks such as batch processing, big data analytics, and large-scale AI model training. They host GPUs, TPUs, and other accelerators in large clusters that process these workloads in parallel at scale.

- Reliability and Redundancy: Enterprise data centers are built with fail-safes, including redundant power supplies, cooling systems, and backups, ensuring near 100% uptime for mission-critical applications.

- Centralized Management: Hosting in data centers allows for centralized monitoring, maintenance, and security management, reducing operational complexity for users.

Among investable companies, CoreWeave is a GPU-accelerated cloud provider tailored for AI and ML workloads. It operates on a pay-as-you-go model, essentially leasing GPU capacity to customers when they need it. Lambda specializes in deep learning compute infrastructure for training AI models. Among established players, Equinix is a leading provider of colocation and interconnection services, offering scalable, secure data center solutions. Equinix operates data centers worldwide, enabling businesses to store data closer to their customers for reduced latency while maintaining a centralized backbone for heavy compute tasks.

Equinix Data Center

Key areas of opportunity in data center investments:

AI and HPC-Focused Data Centers require specialized infrastructure, opening the door for facilities designed to handle intensive AI and HPC tasks with advanced cooling systems and high-density power supplies. These facilities tend to be physically large, and they need the agility to flex capacity quickly as the computational demands of AI research and applications grow. For instance, it has been suggested that Graphcore, which designs IPUs (Intelligence Processing Units) for AI, is investing in its own data center architecture optimized for its chips.

Edge Data Centers are smaller facilities located closer to users to reduce latency. They are crucial for applications like autonomous vehicles and IoT devices. By processing data at the edge, these centers alleviate the load on central data centers and improve response times. One notable example is EdgePresence (now acquired), which deploys modular edge data centers in underserved markets for low-latency applications. Vapor IO builds colocation and edge infrastructure for real-time applications and 5G networks. Mutable, which just raised a Seed round from Samsung NEXT, offers an edge computing platform that turns ISP resources into distributed data centers.

Another opportunity that has drawn overwhelming interest is bringing sustainable and energy-efficient practices to data centers. Green Energy Integration uses renewable energy sources to power data centers, reducing their carbon footprint. Compass Datacenters, which has raised north of $300M from RedBird and other institutional investors, builds energy-efficient data centers leveraging renewable energy and modular designs.

Innovative Cooling Solutions apply advanced cooling technologies to improve energy efficiency and lower operating costs. For instance, Barcelona-based Submer, which just raised $55.5 million at a valuation of half a billion dollars, develops immersion cooling systems that reduce energy usage in data centers. At an earlier stage, LiquidStack focuses on two-phase immersion cooling technology for green data center operations.

2. Edge Compute

At the periphery, semiconductors optimized for edge applications bring processing closer to the source of data. Chips in this domain, such as low-power FPGAs, NPUs, or custom SoCs, are designed for real-time inference, enabling applications like autonomous driving, IoT, and industrial automation. These chips prioritize energy efficiency, low latency, and compact form factors.

Some advantages of computing on the edge include:

- Energy Efficiency: Chips designed for edge devices prioritize low power consumption, ensuring longer battery life for devices like IoT sensors, smart cameras, and mobile devices. This is critical for applications deployed in remote or resource-constrained environments.

- Performance Optimization: These processors strike a balance between computational power and energy use, enabling real-time processing without overburdening power resources. Examples include NPUs (Neural Processing Units) and specialized SoCs like Qualcomm Snapdragon for mobile devices.

- Seamless Communication: Semiconductors that enable integrated connectivity (e.g., Wi-Fi, 5G, Bluetooth) allow IoT devices to communicate effectively with central systems and each other. This ensures smooth data transfer in connected ecosystems like smart homes, autonomous vehicles, and industrial automation.

- Scalability: These solutions support large-scale IoT deployments, in which millions of devices operate in sync over efficient semiconductor-driven networks. For instance, chips with integrated 5G modems coordinate edge devices with cloud services, while interconnects such as CXL link processors, accelerators, and memory inside data center servers.

- Real-Time Responsiveness: Processing data locally on edge devices significantly reduces latency, enabling immediate reactions. This is crucial for applications like autonomous vehicles, where milliseconds can make the difference between safety and failure.

- Reduced Bandwidth Usage: Offloading data processing to edge nodes decreases the amount of data transmitted to the cloud, reducing bandwidth costs and preventing network congestion. This is particularly beneficial in environments with limited or expensive internet access.

- Local Data Privacy: Sensitive data processed locally avoids unnecessary transmission to centralized servers, enhancing privacy and reducing security risks. This is a key strength in healthcare, financial services, and industries handling personal data.
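To make the bandwidth point concrete, here is a minimal Python sketch, assuming a hypothetical edge node that collects one sensor reading per second and uploads only a per-window summary plus any readings above an alert threshold. The names `summarize_window` and `payload_bytes`, the threshold, and the JSON encoding are illustrative assumptions, not any vendor's API.

```python
import json
from dataclasses import dataclass, asdict
from statistics import mean


@dataclass
class Summary:
    """Compact record an edge node might upload instead of every raw reading."""
    sensor_id: str
    count: int
    mean: float
    minimum: float
    maximum: float
    alerts: list[float]  # out-of-range readings, kept verbatim


def summarize_window(sensor_id: str, readings: list[float], alert_threshold: float) -> Summary:
    """Reduce one window of raw readings to a single summary (hypothetical helper)."""
    return Summary(
        sensor_id=sensor_id,
        count=len(readings),
        mean=mean(readings),
        minimum=min(readings),
        maximum=max(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


def payload_bytes(obj) -> int:
    """Size of an object once JSON-encoded, as a rough proxy for upload volume."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    raw = [20.0 + (i % 10) * 0.1 for i in range(600)]  # 10 minutes at 1 reading/s
    summary = summarize_window("sensor-01", raw, alert_threshold=30.0)
    print(payload_bytes(raw), "bytes raw vs", payload_bytes(asdict(summary)), "bytes summarized")
```

In this sketch a 10-minute window of 600 readings collapses to one record of a few fields, while out-of-range values are still forwarded so the cloud sees the events that matter.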

3. Interconnectivity Across the Continuum

The glue binding data center and edge compute lies in advanced interconnect technologies powered by semiconductors. From optical transceivers that enable fast, long-distance data transfer to chiplets that combine multiple specialized functions in a single package, semiconductors ensure seamless communication and workload orchestration.

Regional Hubs or Mid-Tier Data Centers serve as intermediaries, enabling a hierarchical compute structure that balances latency and capacity. Examples include AWS Local Zones and Google Distributed Cloud Edge.

Key areas of opportunity in bridging data center and edge compute:

Hybrid Architectures are platforms that unify compute, storage, and networking across centralized data centers and decentralized edge infrastructures. This enables seamless workload distribution and orchestration across the continuum. Hybrid architectures reduce complexity for enterprises by providing a unified system to manage resources across diverse environments, balancing performance, latency, and cost. Zededa is a startup that offers an orchestration platform for edge computing, enabling deployment, security, and management of distributed edge nodes in hybrid environments. Similarly, Nebulon provides “smartInfrastructure” solutions that integrate edge and on-premises compute and storage with cloud-based management for hybrid environments.

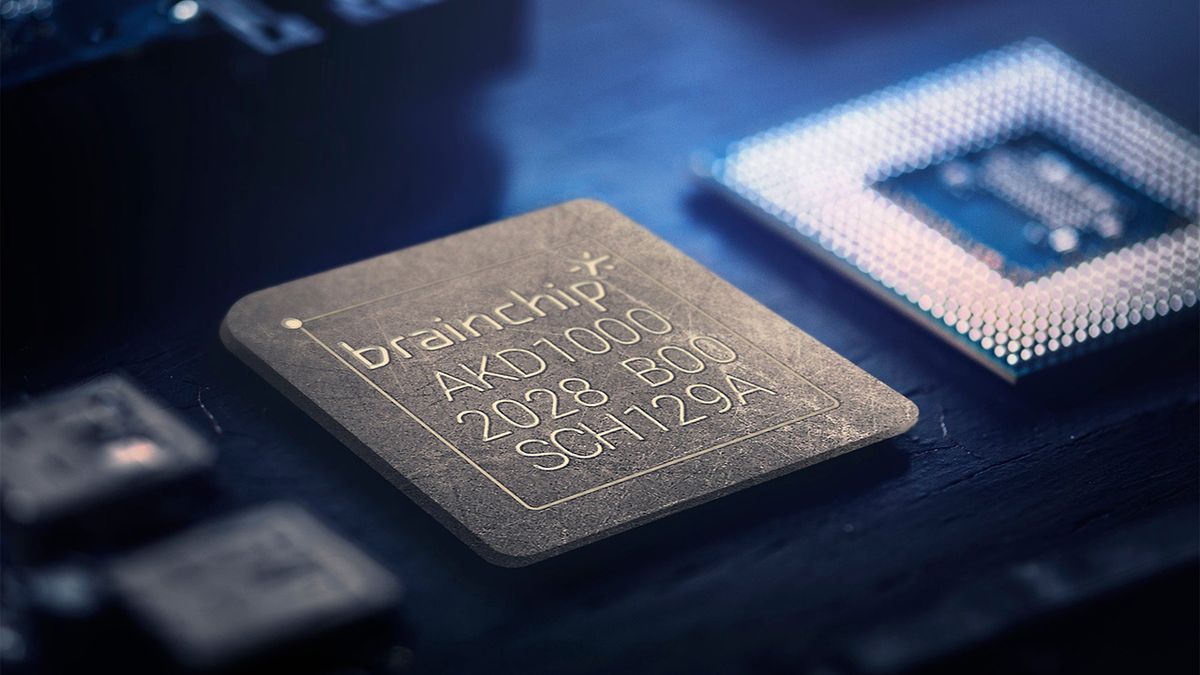

Edge-Accelerated AI is a way to meet growing demand for real-time AI processing at the edge. In this space, startups are designing hardware and software optimized for AI inference and training at distributed locations, while maintaining integration with cloud or data centers. Edge-accelerated AI allows businesses to process data locally for low latency, enhanced privacy, and faster decision-making, which is critical for applications like autonomous vehicles, industrial IoT, and smart cities. For example, Hailo develops edge-specific AI accelerators that deliver high-performance inference processing in a compact, energy-efficient form factor. BrainChip specializes in neuromorphic processors designed to mimic the human brain, enabling ultra-low-power AI inference for edge devices such as surveillance systems and wearables.

BrainChip’s Akida AI Board

Federated Data Management refers to the tools used when workloads and data are distributed across edge, regional, and central compute locations. Startups are creating tools to ensure consistent, secure, and compliant data management across this continuum. Federated data management addresses the challenges of maintaining data integrity, meeting regulatory requirements, and enabling secure data sharing across hybrid environments. For instance, Cohesity offers data management and backup solutions that extend across on-premises, cloud, and edge environments, simplifying compliance and enhancing security. Cloudera, a more established data platform company, is expanding into hybrid cloud and edge solutions, providing platforms for managing data pipelines and analytics across distributed environments.

4. Dynamic Workload Management

Semiconductors play a critical role in enabling dynamic workload distribution across the distributed compute ecosystem, optimizing where tasks are processed based on specific needs. AI techniques running on these chips are increasingly used to determine the most efficient location for processing, whether in the cloud, at the edge, or somewhere in between.

Workloads are split according to several factors:

- Latency requirements, where real-time applications like AR/VR or autonomous vehicles run at the edge while batch jobs and AI model training occur in centralized data centers

- Data sensitivity, where privacy-critical data is processed locally on the edge and non-sensitive tasks are handled centrally

- Cost optimization, which places each task where it is cheapest to run, for example filtering bandwidth-heavy data at the edge and offloading compute-intensive jobs to regional hubs or central data centers
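The three factors above can be expressed as a simple rule-based placement policy. The Python sketch below is illustrative only: the tier names, the per-tier latency figures in `TIER_LATENCY_MS`, and the rule ordering (sensitivity first, then latency, then cost) are assumptions chosen for the example, not measured values or any particular vendor's scheduler.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    EDGE = "edge"
    REGIONAL = "regional"
    CENTRAL = "central"


@dataclass
class Workload:
    name: str
    max_latency_ms: float   # tightest round-trip latency the task can tolerate
    sensitive_data: bool    # must raw data stay on the device or site?
    compute_heavy: bool     # e.g. model training or large batch analytics


# Hypothetical round-trip latency per tier, in milliseconds (illustrative only).
TIER_LATENCY_MS = {Tier.EDGE: 5.0, Tier.REGIONAL: 20.0, Tier.CENTRAL: 100.0}


def place(w: Workload) -> Tier:
    # 1. Data sensitivity: privacy-critical data is processed locally.
    if w.sensitive_data:
        return Tier.EDGE
    # 2. Latency: keep only tiers that meet the deadline (Enum order is edge -> central).
    feasible = [t for t in Tier if TIER_LATENCY_MS[t] <= w.max_latency_ms]
    if not feasible:
        return Tier.EDGE  # nothing meets the budget; fall back to the closest tier
    # 3. Cost: heavy compute goes to the most centralized feasible tier,
    #    light tasks stay as close to the data as possible.
    return feasible[-1] if w.compute_heavy else feasible[0]


if __name__ == "__main__":
    for w in [
        Workload("collision-avoidance", 10, sensitive_data=False, compute_heavy=False),
        Workload("model-training", 3_600_000, sensitive_data=False, compute_heavy=True),
        Workload("patient-vitals", 1_000, sensitive_data=True, compute_heavy=False),
    ]:
        print(w.name, "->", place(w).value)
```

Checking sensitivity first reflects the idea that privacy constraints are hard limits, while latency and cost are trade-offs among whichever tiers remain.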

Companies such as Pensando Systems (now part of AMD) provide programmable platforms for edge services, enabling software-defined compute, networking, storage, and security at the edge and further improving scalability and efficiency in modern data center architectures. This approach helps ensure that resources are used effectively across the continuum, meeting the demands of a wide range of applications.

Key areas of opportunity in dynamic workload management:

Decentralized Cloud Platforms dynamically distribute cloud resources between data centers and edge nodes, keeping resources close to where they are needed. These platforms reduce latency and improve performance by processing data locally when required while leveraging central data centers for heavy lifting. This model is essential for applications like video streaming, gaming, and IoT. For example, Akamai, a content delivery pioneer that has expanded into edge computing through acquisitions, enhances its content delivery network (CDN) with edge capabilities to support decentralized workloads. StackPath (acquired by IBM) provides secure edge services such as CDN and edge computing, enabling businesses to deploy workloads close to end users for minimal latency.

Seamless Orchestration unifies workload management across multiple locations, using tools that dynamically schedule, monitor, and optimize compute resources. Effective orchestration ensures that each workload runs at the right location (edge or central) based on requirements such as latency, cost, or performance, minimizing waste and maximizing efficiency. Red Hat OpenShift, a Kubernetes-based platform, enables developers to build, deploy, and manage applications across hybrid and multi-cloud environments. Mirantis also specializes in multi-cloud orchestration, helping businesses run Kubernetes-based solutions across diverse infrastructures.
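A toy version of what an orchestrator does can be sketched as a greedy scheduler: serve the most latency-sensitive jobs first and send each one to the cheapest site that meets its latency bound and still has capacity. Everything here is hypothetical (the `Site` and `Job` fields, the costs, and the latencies), and greedy assignment is a simple heuristic, not how OpenShift or any other product actually schedules workloads.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    latency_ms: float      # hypothetical round-trip latency from users to this site
    cost_per_unit: float   # hypothetical price per unit of compute
    capacity: int          # free compute units (decremented as jobs are placed)
    assigned: list[str] = field(default_factory=list)


@dataclass
class Job:
    name: str
    units: int             # compute units the job needs
    max_latency_ms: float  # latency bound the job must meet


def schedule(jobs: list[Job], sites: list[Site]) -> tuple[dict[str, str], list[str]]:
    """Greedy placement: tightest-latency jobs first, each to the cheapest
    site that satisfies its latency bound and still has enough capacity.
    Mutates each Site's capacity and assigned list."""
    placement: dict[str, str] = {}
    unscheduled: list[str] = []
    for job in sorted(jobs, key=lambda j: j.max_latency_ms):  # stable sort
        candidates = [
            s for s in sites
            if s.latency_ms <= job.max_latency_ms and s.capacity >= job.units
        ]
        if not candidates:
            unscheduled.append(job.name)  # no site can host it right now
            continue
        best = min(candidates, key=lambda s: s.cost_per_unit)
        best.capacity -= job.units
        best.assigned.append(job.name)
        placement[job.name] = best.name
    return placement, unscheduled


if __name__ == "__main__":
    sites = [
        Site("edge-pop", 5, 3.0, 4),
        Site("regional-hub", 20, 1.5, 16),
        Site("central-dc", 80, 1.0, 1000),
    ]
    jobs = [Job("ar-render", 2, 10), Job("game-state", 4, 30), Job("training", 200, 10_000)]
    print(schedule(jobs, sites))
```

In Kubernetes-based platforms, comparable constraints are usually expressed declaratively, for example through resource requests and node affinity rules, and the platform's own scheduler makes the placement decision.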

Hybrid Hardware consists of semiconductor devices and chips optimized for both environments, so they function effectively at the edge and in central data centers. Hybrid hardware bridges the gap between edge and central compute by providing scalable performance and energy efficiency, enabling applications that require both low-latency responses and compute-intensive processing. For instance, NVIDIA develops GPUs such as the A100 and the Grace Hopper superchip that serve both AI training and inference in data centers, while its Jetson modules bring AI inference to edge devices. Ampere also designs energy-efficient processors optimized for edge deployments as well as hyperscale cloud environments.

Data Flow Optimization refers to tools and platforms that streamline data transfer between edge and central compute, reducing latency and optimizing bandwidth usage. Data flow optimization makes efficient use of network resources, reduces operational costs, and supports real-time applications like autonomous vehicles or telemedicine that rely on fast and accurate data exchange. Cloudflare offers tools for minimizing latency and optimizing traffic routing across distributed systems, enabling faster content delivery and lower bandwidth costs. AMD Pensando (mentioned above) also provides programmable platforms that optimize data flow and connectivity at the edge, enabling efficient and secure interactions with data centers.

The intelligent placement of compute is a consideration that shapes several industries. In autonomous driving, vehicles process sensor data locally (edge), share aggregated data with nearby hubs (regional), and sync insights with central facilities for model retraining. In smart cities, IoT sensors handle local data at the edge for immediate responses (e.g., traffic lights), while long-term analytics run in central facilities. In gaming and AR/VR, real-time rendering happens at the edge, while cloud or central data centers host game assets and manage global player interactions.

—————————————–

If you are a builder, investor, or researcher in the space and would like to chat, please reach out to me at ayla@thelotuscapital.com