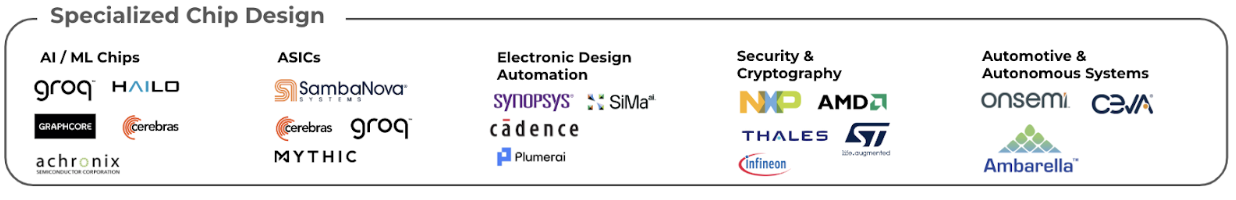

Customization in Chip Design

There has been a departure from general-use chips as the semiconductor paradigm moves towards greater customization and purpose-built chip design. This shift is driven by the need for optimized performance, energy efficiency, and specialized functionalities across various applications.

Customization allows hardware to catch up to the unique demands of advancing technology, and several trends confirm this shift:

1. Emergence of domain-specific architectures for AI and ML

- E.g. GPUs, TPUs, NPUs

2. Rise of ASICs to meet specialized processing needs

- E.g. Crypto mining and AI

3. Modularity, chiplet technology and FPGAs (Field-Programmable Gate Arrays)

4. AI for rapid prototyping and design optimization

5. Specialized chip sectors

- Automotive and Autonomous Systems

- Cryptography

(1) Domain-specific architectures for AI and ML

AI and ML semiconductors are uniquely designed to address the specific demands of artificial intelligence and machine learning workloads, which differ from traditional computing tasks in that they require parallelism, high-speed data processing, and greater energy efficiency.

How do they differ?

AI/ML tasks are dominated by matrix operations (multiplications and additions) applied to large datasets, and these operations can be run in parallel. AI/ML semiconductors such as GPUs, TPUs, and NPUs are therefore built with many smaller cores that process multiple data streams simultaneously. NVIDIA’s GPUs and Google’s TPUs, for example, use this parallelism to accelerate tasks like neural network training. Many of these chips also include dedicated hardware blocks for matrix math (e.g., Tensor Cores in NVIDIA GPUs) that deliver faster computation than general-purpose processors.

AI workloads also require rapid data movement between memory and processing units. These chips incorporate high-bandwidth memory (e.g., HBM2) and advanced interconnects (e.g., NVLink) to minimize latency and bottlenecks.

Finally, AI tasks such as model training can consume enormous amounts of power. Purpose-built semiconductors improve power efficiency through specialized architectures (e.g., in-memory computing) and reduced-precision computation (e.g., 8-bit or 16-bit operations instead of 32-bit floating point).
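To make the reduced-precision idea concrete, here is a minimal sketch in plain Python. It uses symmetric 8-bit integer quantization, which is one common reduced-precision scheme and not necessarily the format any particular chip uses. The function names and the sample values are hypothetical, chosen only for illustration.

```python
# Toy illustration of reduced-precision arithmetic (pure Python, no hardware assumed).
# All names and values here are hypothetical.

def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale (symmetric quantization)."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    return [round(v / scale) for v in values], scale


def dot(a, b):
    """Multiply-accumulate: the core step of every matrix multiplication."""
    return sum(x * y for x, y in zip(a, b))


weights = [0.12, -0.53, 0.98, 0.07]
inputs = [1.50, 0.25, -0.75, 2.00]

exact = dot(weights, inputs)

q_w, scale_w = quantize(weights)
q_x, scale_x = quantize(inputs)
# Integer multiply-accumulate, then a single rescale back to real units.
approx = dot(q_w, q_x) * scale_w * scale_x

relative_error = abs(approx - exact) / abs(exact)
print(f"exact={exact:.4f} approx={approx:.4f} relative error={relative_error:.2%}")
```

The integer version needs a quarter of the storage of 32-bit values and keeps the multiply-accumulate loop in small integers, which is the kind of trade (a little accuracy for less memory traffic and simpler arithmetic) that purpose-built AI chips are designed around.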

Types of AI chips

- GPUs (Graphics Processing Units):

- Originally for rendering graphics, now widely used for AI/ML workloads

- E.g. NVIDIA A100, AMD Instinct

- TPUs (Tensor Processing Units):

- Designed by Google specifically for AI/ML workloads, originally built around TensorFlow

- E.g. Google TPU v4

- NPUs (Neural Processing Units):

- Optimized for neural network tasks, often embedded in mobile devices or edge hardware.

- E.g. Apple Neural Engine, Huawei Ascend.

- Rain AI, a semiconductor startup backed by Sam Altman, is developing neuromorphic processing units designed to emulate the human brain. These chips aim to improve energy efficiency and performance in AI computations. The company recently hired ex-Apple chip executive Jean-Didier Allegrucci, and the expectation is that Rain could grow into an NVIDIA rival.

- Analog AI Chips:

- Use analog processing for AI inference, providing the benefit of ultra-low power consumption

- E.g. Mythic AI

Figure: Rain AI’s Neuromorphic Processing Unit

On Wall Street, AI chips are broken into two categories:

- Training-focused: Optimized for the massive parallel computations required to train AI models, these chips prioritize high memory bandwidth and raw compute power to handle complex datasets and iterative processes.

- Inference-focused: Designed for running pre-trained AI models, these chips prioritize low latency, energy efficiency, and fast execution to process real-time data efficiently in applications like voice assistants, image recognition, and autonomous vehicles.

Wall Street analysts view training chips as the high-margin growth engine in AI hardware, while inference chips are seen as the key to scaling AI deployment across applications. As the winning LLMs crystallize and models approach their maximum efficiency, less training will take place, and demand will shift toward inference chips. Analysts also watch for companies transitioning from general-purpose chips to specialized solutions, as this signals alignment with market trends and potential for valuation upside.
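A rough back-of-the-envelope sketch shows why serving demand can overtake training demand. It relies on a widely used rule of thumb that is not part of the analysis above: training costs about 6 floating-point operations per parameter per token, and inference costs about 2. The model size, training set size, and daily traffic are hypothetical figures, not data about any real model.

```python
def training_flops(params, training_tokens):
    """Rule-of-thumb cost of one training run: about 6 FLOPs per parameter per token."""
    return 6 * params * training_tokens


def inference_flops(params, served_tokens):
    """Rule-of-thumb cost of serving: about 2 FLOPs per parameter per generated token."""
    return 2 * params * served_tokens


# Hypothetical model and traffic, for illustration only.
PARAMS = 70e9                 # 70B-parameter model
TRAINING_TOKENS = 2e12        # 2T tokens seen once during training
TOKENS_SERVED_PER_DAY = 50e9  # 50B tokens generated per day across all users

train = training_flops(PARAMS, TRAINING_TOKENS)
per_day = inference_flops(PARAMS, TOKENS_SERVED_PER_DAY)
days_to_match = train / per_day

print(f"training run:      {train:.2e} FLOPs")
print(f"inference per day: {per_day:.2e} FLOPs")
print(f"days of serving to match one training run: {days_to_match:.0f}")
```

Under these assumptions, training is a one-time cost while inference recurs every day the model is in service, so cumulative inference compute eventually exceeds the training run. The crossover point moves with traffic, which is why the estimate is only a sketch.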

(2) ASICs (Application Specific Integrated Circuits)

ASICs are custom chips that offer optimized performance, efficiency, smaller physical size, and cost-effectiveness compared to general-purpose processors. They are tailor-made for specific applications, such as cryptocurrency mining or specialized AI tasks.

How ASICs Work

Unlike general-purpose processors that handle a wide range of tasks, ASICs are engineered to perform a particular function or set of functions. By focusing on specific tasks, ASICs can execute operations more quickly and efficiently. For instance, Cerebras Systems, which has raised over $720M, accelerates deep learning through its wafer-scale engines, which integrate numerous cores on a single silicon wafer.

ASICs’ tailored designs remove unnecessary circuitry, which saves energy. That efficiency makes them crucial for battery-powered devices and large-scale data centers. Lux-backed Mythic uses this to its advantage: the company provides AI inference solutions through analog compute-in-memory technology, embedding processing within memory to achieve high performance and low power consumption. SambaNova Systems, another well-regarded company in the space, offers an integrated hardware and software platform for AI and data analytics, using custom-designed ASICs to enhance performance and efficiency. SambaNova has raised over $1B from SoftBank, GV, and Intel Capital, among others.

In ASIC design, eliminating extraneous components results in a more compact chip. In high-volume production, ASICs can be more economical than general-purpose chips, because the one-time design cost is spread across many units. From a security lens, custom designs can incorporate specific security features, enhancing protection against vulnerabilities.
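The high-volume cost argument can be sketched as a simple break-even calculation: an ASIC carries a large one-time design cost (often called non-recurring engineering, or NRE) but a lower per-unit cost. The dollar figures and function names below are hypothetical and chosen only to show the shape of the trade-off.

```python
import math


def total_cost(nre, unit_cost, volume):
    """One-time design cost (NRE) plus per-unit cost times units built."""
    return nre + unit_cost * volume


def break_even_volume(asic_nre, asic_unit_cost, general_unit_cost):
    """Smallest volume at which the ASIC costs no more than off-the-shelf chips."""
    saving_per_unit = general_unit_cost - asic_unit_cost
    if saving_per_unit <= 0:
        return None  # the ASIC never pays back its design cost
    return math.ceil(asic_nre / saving_per_unit)


# Hypothetical figures for illustration only.
ASIC_NRE = 5_000_000       # one-time design, verification and mask cost (USD)
ASIC_UNIT_COST = 20        # per-chip cost once in production (USD)
GENERAL_UNIT_COST = 120    # per-chip price of an off-the-shelf processor (USD)

volume = break_even_volume(ASIC_NRE, ASIC_UNIT_COST, GENERAL_UNIT_COST)
print(f"break-even at {volume:,} units")
for units in (10_000, volume, 500_000):
    asic = total_cost(ASIC_NRE, ASIC_UNIT_COST, units)
    general = total_cost(0, GENERAL_UNIT_COST, units)
    print(f"{units:>9,} units: ASIC ${asic:,} vs general-purpose ${general:,}")
```

Below the break-even volume the general-purpose chip is cheaper overall; above it, the ASIC's lower unit cost dominates.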

(3) Modularity, chiplet technology and FPGAs

Modular chip design, which is often referred to as chiplet technology, involves assembling a system-on-chip (SoC) by integrating multiple specialized chiplets within a single package, each dedicated to specific functions. This approach enhances customization and scalability, allowing for the combination of diverse functionalities tailored to specific applications. For example, AMD has implemented chiplets in their Ryzen and EPYC processors to boost performance and scalability. Similarly, Intel is investing in modular designs to improve flexibility across its product lines. NVIDIA is exploring chiplet architectures to advance AI and graphics processing capabilities, while Arm is developing multi-chip modules for custom silicon solutions. In the automotive sector, Bosch and Tenstorrent are collaborating to standardize automotive chips using chiplets, aiming to reduce costs and accelerate development.

On the startup front, Eliyan develops high-performance chiplet interconnect solutions for efficient multi-die integration. Seoul-based Rebellions is an AI chip startup collaborating with Arm, Samsung Foundry, and ADTechnology on next-gen AI computing. Canada-based Tenstorrent develops high-performance AI processors alongside its automotive chiplet work with Bosch.

Field-Programmable Gate Arrays (FPGAs) are reconfigurable integrated circuits that can be programmed after manufacturing to execute specific tasks, offering flexibility as computational needs evolve. Unlike ASICs, which are designed for fixed functions, FPGAs consist of configurable logic blocks (CLBs) and input/output (I/O) blocks connected through a programmable routing fabric, which allows custom digital circuits to be built on the same silicon. This reprogrammability lets FPGAs adapt to new tasks or be re-optimized as requirements change, making them suitable for applications like AI workloads. Intel’s Agilex FPGAs are one example.
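One way to picture reprogrammability is the lookup table (LUT), the small configurable truth table typically found inside a logic block. This detail is not spelled out above, and the class below is a simplified software model with hypothetical names, not a vendor API or a description of any specific FPGA.

```python
class LookupTable:
    """A k-input lookup table (LUT): a configurable truth table, modeled in software."""

    def __init__(self, num_inputs):
        self.num_inputs = num_inputs
        self.table = [0] * (2 ** num_inputs)   # one stored bit per input combination

    def program(self, function):
        """'Configure' the LUT by storing the output of `function` for every input combination."""
        for index in range(len(self.table)):
            bits = [(index >> i) & 1 for i in range(self.num_inputs)]
            self.table[index] = int(bool(function(*bits)))

    def evaluate(self, *bits):
        """Look up the stored output bit; no logic is computed at evaluation time."""
        index = sum(bit << i for i, bit in enumerate(bits))
        return self.table[index]


lut = LookupTable(num_inputs=2)

lut.program(lambda a, b: a & b)            # behave as an AND gate
and_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

lut.program(lambda a, b: a ^ b)            # reprogram the same block as XOR
xor_outputs = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

print("AND:", and_outputs)
print("XOR:", xor_outputs)
```

The same block serves as two different gates depending only on its stored configuration. An ASIC, by contrast, would fix one of these functions in silicon.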

Figure: Eliyan chiplet

Several startups are leveraging FPGA technology to deliver innovative solutions. Flex Logix provides eFPGA (embedded FPGA) technology for integration into SoCs, offering reconfigurability. M12-backed Syntiant develops ultra-low-power AI processing solutions using FPGAs for edge applications. Enflame Technology, which has raised over $400M from Tencent and Alibaba, focuses on AI acceleration using FPGA platforms in data centers.

(4) AI-powered chip design

The use of AI and machine learning in the chip design process allows for rapid prototyping and optimization, leading to more efficient and customized chips. AI-driven design tools apply machine learning to digital design flows to improve performance and efficiency.

AI-powered chip design involves training models on vast datasets of existing chip designs and performance metrics. These models learn to predict the outcomes of various design choices and can generate new designs that meet specified criteria. Techniques include:

- Generative Adversarial Networks (GANs): Used to create new design layouts by learning from existing examples.

- Reinforcement Learning: Models learn to make a sequence of design decisions that lead to optimal performance.

- Natural Language Processing (NLP): LLMs can interpret design specifications written in natural language and translate them into design parameters.
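The loop these techniques share (propose a design choice, score it, keep what improves the score) can be sketched with a toy block-placement problem. The sketch deliberately uses a plain random-swap local search, not a GAN, reinforcement learning, or an LLM, and it is not how any commercial tool works. The netlist, block names, and grid are hypothetical.

```python
import random

# Hypothetical netlist: each net lists the two blocks it connects.
NETS = [("cpu", "cache"), ("cpu", "dma"), ("cache", "mem_ctrl"),
        ("dma", "mem_ctrl"), ("mem_ctrl", "io"), ("cpu", "io")]
BLOCKS = ["cpu", "cache", "dma", "mem_ctrl", "io", "pll"]
GRID = [(x, y) for y in range(2) for x in range(3)]   # 3x2 grid of slots


def wirelength(placement):
    """Sum of Manhattan distances over all nets (a simple proxy for routing cost)."""
    total = 0
    for a, b in NETS:
        (ax, ay), (bx, by) = placement[a], placement[b]
        total += abs(ax - bx) + abs(ay - by)
    return total


def optimize(placement, steps=500, seed=0):
    """Random-swap local search: keep a swap only if it does not increase wirelength."""
    rng = random.Random(seed)
    best = dict(placement)
    best_cost = wirelength(best)
    for _ in range(steps):
        a, b = rng.sample(BLOCKS, 2)
        candidate = dict(best)
        candidate[a], candidate[b] = candidate[b], candidate[a]
        cost = wirelength(candidate)
        if cost <= best_cost:
            best, best_cost = candidate, cost
    return best, best_cost


initial = dict(zip(BLOCKS, GRID))
initial_cost = wirelength(initial)
final, final_cost = optimize(initial)
print(f"wirelength: {initial_cost} -> {final_cost}")
```

Learned approaches replace the random proposal step with a model that predicts which moves are likely to pay off, which matters once the design space is far too large to search blindly.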

Startups and established vendors alike are developing tools to streamline chip design workflows. ChipStack uses AI to significantly reduce chip design cycle times, aiming for designs in under a month with minimal personnel. Synopsys is a leader in EDA (electronic design automation) tools, providing software and IP for designing and verifying complex chips. The company offers products including the Fusion Design Platform, which helps streamline chip development and optimization. Cadence offers tools for IC design, simulation, and verification, with solutions like the Virtuoso and Allegro platforms. The company recently integrated AI tools like Cadence Cerebrus to automate and optimize chip design workflows.

(5) Specialized Chip Sectors

Specialized chip sectors address unique, sector-specific demands in industries including security, cryptography, and automotive systems. In security, chips like Hardware Security Modules (HSMs) and Trusted Platform Modules (TPMs) provide hardware-based encryption and key management solutions, ensuring data integrity and protection across devices and networks. These chips play a vital role in industries such as finance, healthcare, and defense, where secure data handling is paramount.

In automotive, specialized chips revolutionize how vehicles operate and interact with their environment. Advanced Driver-Assistance Systems (ADAS) chips enable essential features such as collision avoidance, lane-keeping, and adaptive cruise control, enhancing safety and convenience. Meanwhile, sensors and LIDAR technologies rely on high-performance chips to process real-time data, enabling precise navigation and decision-making for autonomous vehicles.

—————————————–

If you are a builder, investor or researcher in the space and would like to have a chat – please reach out to me at ayla@thelotuscapital.com